On October 28, 2021, the Federal Trade Commission (FTC) issued an enforcement policy statement warning businesses against using dark patterns to trick or trap consumers into subscription services.

So what does this mean for you?

This article covers the FTC's announcement and related issues concerning dark patterns, the GDPR, and other privacy laws.

- 1. What are Dark Patterns?

- 2. Why Dark Patterns Matter

- 3. How Common are Dark Patterns?

- 4. Categories of Dark Patterns

- 4.1. Deceptive Design

- 4.2. Forced Engagement

- 4.3. Rewards and Penalizations

- 4.4. Positive vs. Negative Wording

- 5. The GDPR and Dark Patterns

- 6. U.S. Law and Dark Patterns

- 6.1. The CCPA (CPRA) and Dark Patterns

- 6.2. FTC Compliance Requirements for Dark Patterns

- 7. Summary

What are Dark Patterns?

Essentially, dark patterns are an underhanded, manipulative way to get people to do what you want them to on your company's website.

Typically, this involves a sign-up process and the collection and use of a consumer's personal information without their consent. They might also be used to get your website's visitors to click on an ad that they would otherwise ignore.

The term "dark patterns" entered the English language in 2010 when Harry Brignull, a user experience researcher in the UK, coined it to describe deceptive practices used to manipulate a company's customers.

The questionable things Brignull noticed included user interfaces that had been made specifically to deceive people into doing things, like buying insurance with their purchase or "signing up for recurring bills."

Of course, none of those practices are exactly new. Many online businesses have employed them practically since the Internet began. Unethical, scammy business people have used them in other iterations almost since humans invented commerce.

Some dark patterns are more subtle than others, but all of them are designed to exploit the fact that many people don't read the fine print when they're online. And even if they did, the language used in dark patterns is often deliberately confusing or misleading.

With that said, most dark patterns have a few things in common. For instance, they may:

- Omit or downplay essential information

- Hide the total price of a product or service

- Use confusing or deceptive language

Why Dark Patterns Matter

Dark patterns are sneaky and often go undetected by the average person. They play upon cognitive biases that we all have without realizing it.

Unethical companies can use them to exploit customers, specifically by manipulating them into making decisions that they otherwise wouldn't. This is unfair, and it can have severe consequences for people tricked by dark patterns.

In some cases, dark patterns can even be illegal. For example, if a company uses a dark pattern to trick someone into buying something they don't want or need, that could be considered fraud.

Dark patterns also matter because they can hurt the overall user experience of the internet. When companies use dark patterns to manipulate their customers, it makes the internet a less enjoyable and trustworthy place for everyone.

How Common are Dark Patterns?

With the FTC making declarations about new enforcement policies against the use of dark patterns, you would think that many companies are using them.

You'd unfortunately be correct.

Dark patterns are quite common. They're used in various industries, from online retail to advertising. And they come in all shapes and sizes.

An international study published in 2019 discovered that out of 5,000 privacy notifications sent out by a slew of companies across Europe, more than half used dark patterns. Moreover, only 4.2 percent gave their customers a choice regarding consent or delivered more than simple confirmation that a customer's data would be collected.

Here are some examples of typical dark pattern usage:

- Pre-ticked checkboxes: You know the ones. They're the checkboxes already checked for you when you visit a website or sign up for a service. The assumption is that you'll leave them without bothering to uncheck them. But often, these boxes are for things like opting into email newsletters or agreeing to receive marketing materials. Note that these are not allowed under the GDPR.

- Misleading buttons: This is when a button says one thing but does another. A classic example is a "Cancel" button that doesn't actually cancel your subscription or a "No Thanks" button that signs you up for something anyway.

- Disabled links: Have you ever tried to click on a link only to find that it's disabled? Just think how exasperating it would be to click on a link to close out a popup only to find it doesn't do anything at all. Thus, you'd have to either do what the popup asks you or close your browser tab altogether.

- An app that requires users to share contacts.

- An app that requires social media account information before it will function.

- An app that requires a user's physical location before functioning.

- A website user is forced to sign up for a newsletter before moving on to a service.

- A search engine employs default settings that track and monitor a user's input without letting the user know it does so.

The legal definition of dark patterns is still in flux, but lawmakers are increasingly taking notice of these manipulative user interfaces, as can be seen by the FTC's announcement.

As privacy legislation continues to be updated, it will be necessary for companies to stay aware of the definition of dark patterns and how they can avoid using them in their own designs.

Categories of Dark Patterns

Dark patterns can broadly be divided into four categories:

Deceptive Design

This is when a company uses misleading design elements to trick users into taking actions they wouldn't otherwise take, such as providing false information or hiding essential details.

Another example of deceptive design might be to use default settings. Service providers know that many people do not change the settings on their devices, so they purposefully create defaults that collect as much information as possible. In fact, only about five percent of all people attempt to change the basic, manufacturer-installed settings at all.

The default privacy settings of a digital product, device, etc. can be unbelievably powerful. For example, a study about organ donation found the following:

In general, there are two kinds of policies regarding consent collection. There is explicit opt-in consent, and there is presumed consent. The former requires that an individual physically check a box on a paper or digital form before becoming a donor. The latter assumes that an individual has agreed to organ donation by default.

In countries that use presumed consent, a lot more people are organ donors.

These dark patterns are often used because they exploit the fact that users don't read all of the text on a website before taking action. By pre-selecting specific options or burying critical information in fine print, companies can take advantage of users who don't know any better.

Forced Engagement

This occurs when a company uses manipulative techniques to keep users engaged with their product, even if they want to stop using it. For example, a company might make it difficult for users to cancel their subscriptions or continue bombarding them with notifications after unsubscribing.

While it's certainly understandable that companies want to increase their sales, these coercive tactics can often backfire. Customers are more likely to resent being forced into a decision and may even take their business elsewhere.

In addition, if customers feel like they're being taken advantage of or manipulated, they're less likely to trust the company in the future.

Rewards and Penalizations

Another manipulative practice is rewarding customers for making a decision the company wants them to make and penalizing them for refusing.

For example, rewards can take the form of discounts, making certain items or services free, or adding on additional features to an app.

On the other hand, punishments for not doing what the company wants might be something like refusing to allow customers to browse the company's website until they accept the use of "all cookies," including those that are used to track the customer for marketing purposes.

Positive vs. Negative Wording

One typical dark pattern is the use of negative wording to make users feel as if they're missing out on something. For example, a company might say, "Don't miss out! Subscribe now!" or "Hurry! Offer ends soon!" This creates a feeling of urgency and pressure that can coerce users into taking an action they may not have otherwise considered.

Another dark pattern that's becoming increasingly common is the use of popups and banners. While these can effectively get a user's attention, they can also be extraordinarily intrusive and cause users to leave a website altogether.

In some cases, as already mentioned, companies will even prevent users from closing a popup or banner unless they take the desired action.

One company that was fined for using these types of dark patterns is Fareportal. The state of New York investigated the company and found that it had engaged in "systematic deception tactics" between 2017 and 2019.

One of its most significant transgressions was creating false urgency concerning the availability of hotel accommodations and flight seating.

For instance, if someone searched for three tickets, they would see "only 4 tickets left." In the same way, consumers would see a comparable message when searching for available hotel rooms.

The GDPR and Dark Patterns

The General Data Protection Regulation (GDPR) is a European Union regulation that organizations in the EU, and those that do business there, must follow in order to protect the privacy of personal data.

One of the key provisions of the GDPR is the requirement that businesses must obtain the clear, informed, and active consent of users when getting them to opt in.

For instance, the GDPR's definition of consent is:

"any freely given, specific, informed and unambiguous indication of the data subject's wishes by which he or she, by a statement or by a clear affirmative action, signifies agreement to the processing of personal data relating to him or her."

To comply with this provision, businesses cannot suppose that "Silence, pre-ticked boxes or inactivity ... constitute consent."

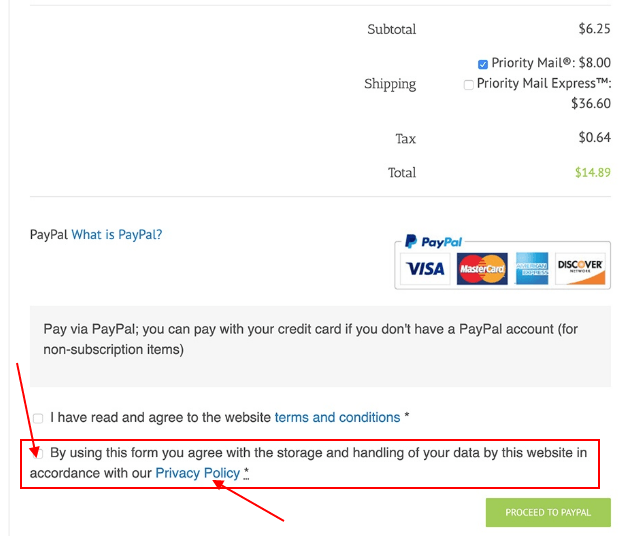

Here's an example of a consent form that would meet the GDPR's requirements:

The GDPR's provisions also give individuals the right to opt out of having their personal data collected and used for certain purposes.

You can see how these requirements work against dark pattern techniques, which are intrusive and violate the privacy rights granted under the GDPR.
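The consent conditions quoted above can be sketched as a simple server-side check. This is a minimal illustration rather than a compliance tool: the `ConsentRecord` fields and the `is_valid_consent` function are hypothetical names chosen for this example and are not part of the GDPR or of any library.

```python
from dataclasses import dataclass


@dataclass
class ConsentRecord:
    """Hypothetical record of how a consent checkbox was presented and used."""
    purpose: str              # what the data will be used for, e.g. "email newsletter"
    checked_by_default: bool  # True if the box was pre-ticked when the form loaded
    user_checked_box: bool    # True only if the user actively ticked the box


def is_valid_consent(record: ConsentRecord) -> bool:
    """Return True only for specific consent given by a clear affirmative action."""
    if not record.purpose.strip():
        return False  # consent must be tied to a specific purpose
    if record.checked_by_default:
        return False  # pre-ticked boxes do not constitute consent
    return record.user_checked_box  # silence or inactivity is not consent


active = ConsentRecord("email newsletter", checked_by_default=False, user_checked_box=True)
pre_ticked = ConsentRecord("email newsletter", checked_by_default=True, user_checked_box=True)
ignored = ConsentRecord("email newsletter", checked_by_default=False, user_checked_box=False)

print(is_valid_consent(active))      # True
print(is_valid_consent(pre_ticked))  # False
print(is_valid_consent(ignored))     # False
```

Rejecting the pre-ticked case even when the final state is "checked" is a deliberate choice in this sketch: the form cannot tell an active choice apart from a default the user never noticed.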

U.S. Law and Dark Patterns

For some time, the FTC and other law enforcement agencies have taken significant steps to protect consumers from being scammed by unscrupulous sellers in the subscription marketplace.

Most of these actions focus on taking on various schemes that unscrupulous sellers use to manipulate consumers into purchasing or participating in such things as free-to-pay conversions, prenotification plans, or continuity plans.

However, there hasn't been a single federal law that included the regulation of dark patterns. The U.S. government has largely left that to the individual states, such as California.

The CCPA (CPRA) and Dark Patterns

The California Consumer Privacy Act (CCPA) gives California residents the right to know what personal information is collected about them, how it's used, and with whom it's shared. But what a lot of company owners don't know is that the CCPA also contains provisions against dark patterns.

The law was amended, and in addition to banning the use of dark patterns, the amended regulations also clarified that businesses must disclose their data retention practices and contact information for consumers who want to use their rights under the CCPA/CPRA.

In protecting California consumers, former California Attorney General Xavier Becerra stated in one of his press conferences as the state's highest law enforcement official, "these protections ensure that consumers will not be confused or misled when seeking to exercise their data privacy rights."

The amendment provides companies with the following guidance about what they must and must not do vis-a-vis dark patterns:

- The business must make it easy for consumers to exercise their right to opt out of the sale of their personal information. This means that the number of steps for submitting a request to opt out cannot be more than the number of steps for submitting a request to opt in.

- A business cannot use confusing language, such as double negatives (e.g., "Don't Not Sell My Personal Information"), when giving consumers the choice to opt out.

- Businesses must not demand that consumers click through or listen to arguments why they should not submit a request to opt out before verifying their request.

- Businesses must take steps to ensure that the process for submitting a request to opt out is easy and does not require the consumer to provide unnecessary personal information.

- Businesses shall not demand that the consumer search or scroll through the text of a webpage, Privacy Policy, or similar document to locate the mechanism for submitting a request to opt out.
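As a rough illustration, the guidance above can be turned into an automated review of an opt-out flow. The sketch below rests on several assumptions: the `OptOutFlow` fields and `audit_opt_out_flow` function are hypothetical, step counts are entered by whoever reviews the design, and the double-negative pattern is a crude heuristic that will miss many confusing phrasings.

```python
import re
from dataclasses import dataclass


@dataclass
class OptOutFlow:
    """Hypothetical description of a site's opt-in and opt-out journeys."""
    opt_in_steps: int            # clicks or screens needed to opt in
    opt_out_steps: int           # clicks or screens needed to opt out
    link_label: str              # text of the opt-out link or button
    requires_scrolling: bool     # True if the mechanism is buried in a long page
    shows_retention_pitch: bool  # True if users must click through reasons to stay


# Heuristic only: two negations close together, as in "Don't Not Sell".
DOUBLE_NEGATIVE = re.compile(
    r"\b(?:don't|do not|not|never)\s+(?:\w+\s+)?(?:not|never|no)\b",
    re.IGNORECASE,
)


def audit_opt_out_flow(flow: OptOutFlow) -> list[str]:
    """Return a list of problems; an empty list means none of these checks failed."""
    problems = []
    if flow.opt_out_steps > flow.opt_in_steps:
        problems.append("opting out takes more steps than opting in")
    if DOUBLE_NEGATIVE.search(flow.link_label):
        problems.append("opt-out label uses a double negative")
    if flow.requires_scrolling:
        problems.append("users must search or scroll to find the opt-out mechanism")
    if flow.shows_retention_pitch:
        problems.append("users must click through arguments before opting out")
    return problems


bad = OptOutFlow(1, 4, "Don't Not Sell My Personal Information", True, True)
good = OptOutFlow(2, 1, "Do Not Sell My Personal Information", False, False)

for problem in audit_opt_out_flow(bad):
    print(problem)
print(audit_opt_out_flow(good))  # []
```

A check like this can flag obvious regressions during design review, but it does not replace a legal reading of the regulations.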

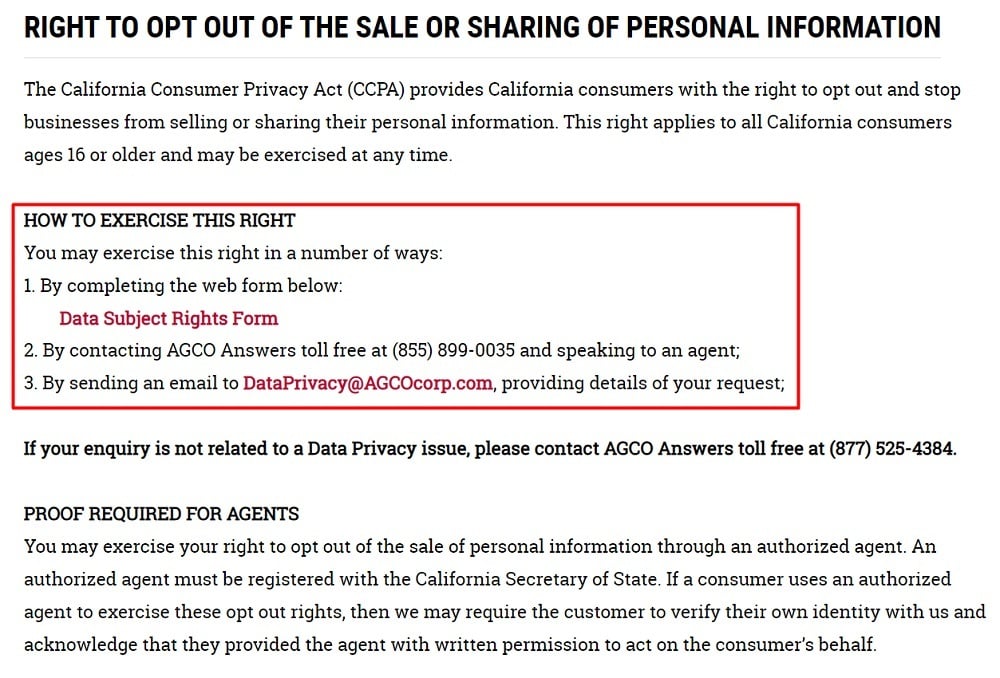

Here's an example of how to note to users that they have the right to opt out, and how to do it:

The California Attorney General clearly expressed that businesses using dark patterns in violation of the CCPA/CPRA will be held accountable. However, companies have a 30-day cure period to make the necessary changes to their website or app design.

FTC Compliance Requirements for Dark Patterns

The FTC has put in place three main requirements that businesses must follow to ensure they are compliant with the law. These are:

- Businesses must disclose all material terms of a transaction, including information such as how much the product or service costs, when the consumer must act to stop further charges, and how to cancel.

- Businesses must obtain express informed consent from consumers before charging them for a product or service. This includes getting the consumer's approval and not including information that interferes with, detracts from, contradicts, or otherwise undermines the consumer's ability to provide their express informed consent.

- Businesses need to make it simple for consumers to cancel. Cancellation mechanisms should be simple to use and understand. The procedure for cancellation should be as simple as the process for signing up for a service.
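The three requirements above lend themselves to a short pre-launch checklist for a subscription checkout. The following is a sketch under stated assumptions: `SubscriptionOffer` and `review_offer` are hypothetical names, and comparing step counts is only a stand-in for the FTC's broader "as simple as signing up" standard.

```python
from dataclasses import dataclass


@dataclass
class SubscriptionOffer:
    """Hypothetical summary of what a checkout page shows and records."""
    price: str                     # e.g. "$9.99 per month"; empty if not shown
    deadline_to_stop_charges: str  # when the consumer must act to avoid further charges
    how_to_cancel: str             # plain-language cancellation instructions
    user_ticked_consent_box: bool  # True only if the user actively agreed before being charged
    signup_steps: int
    cancel_steps: int


def review_offer(offer: SubscriptionOffer) -> list[str]:
    """Return the issues found; an empty list means these checks passed."""
    issues = []
    disclosures = {
        "price": offer.price,
        "deadline to stop further charges": offer.deadline_to_stop_charges,
        "how to cancel": offer.how_to_cancel,
    }
    for label, text in disclosures.items():
        if not text.strip():
            issues.append(f"missing disclosure: {label}")
    if not offer.user_ticked_consent_box:
        issues.append("no express informed consent before charging")
    if offer.cancel_steps > offer.signup_steps:
        issues.append("cancelling takes more steps than signing up")
    return issues


clear_offer = SubscriptionOffer(
    "$9.99 per month", "Cancel before the 1st of each month",
    "Account > Billing > Cancel", True, 3, 2,
)
vague_offer = SubscriptionOffer("", "", "Call us", False, 2, 6)

print(review_offer(clear_offer))  # []
for issue in review_offer(vague_offer):
    print(issue)
```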

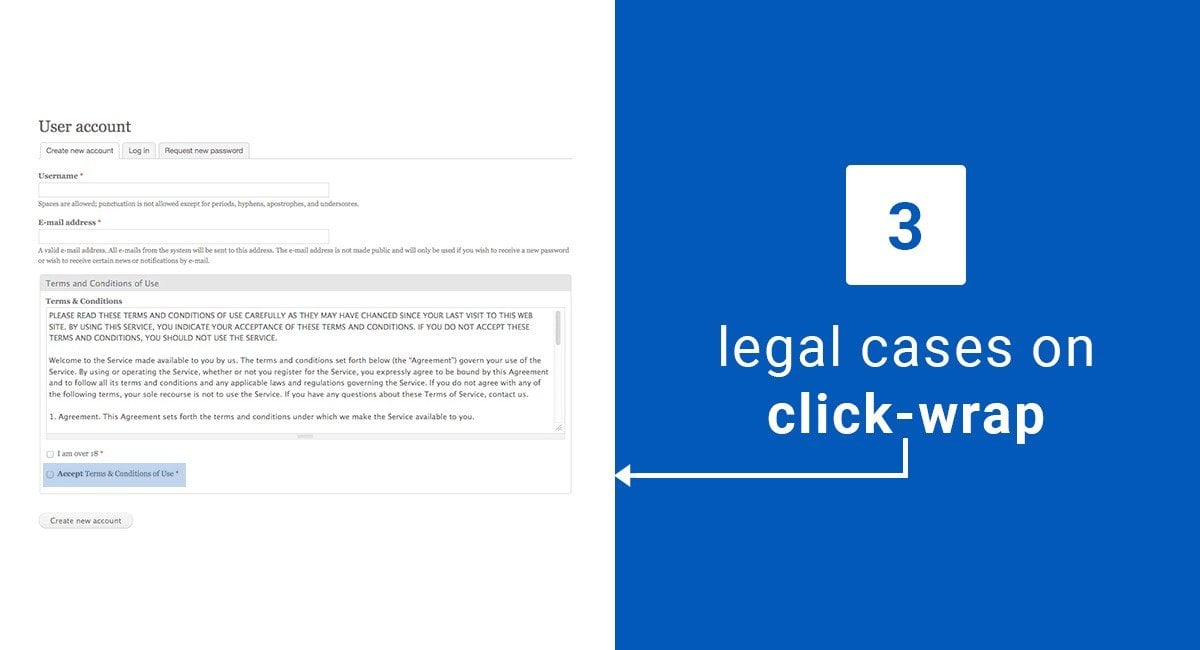

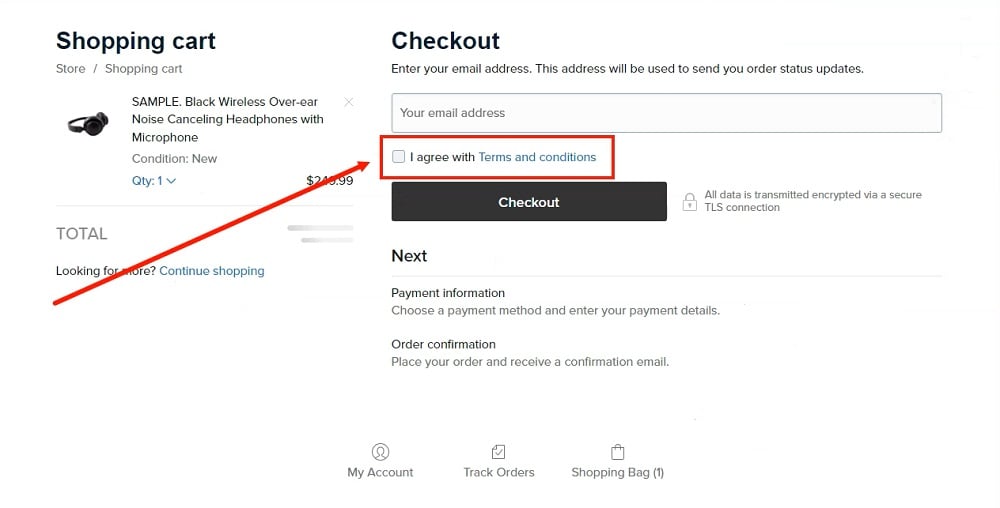

You can get informed consent by using a checkbox next to a statement that shows checking the box equals consent or agreement. Here's an example of this used at a checkout page:

Here's an example of a simple cancellation method that can be used for newsletters to allow easy unsubscribing as well as managing of subscriptions:

By making it clear what businesses can and cannot do with dark patterns and providing a way for consumers to opt out of having their personal data collected and used for specific purposes, the FTC hopes to see a reduction in the overall use of dark patterns.

Summary

Many companies are willing to bend or break the law in order to gain an edge over their competitors. This leaves ethical business owners at a disadvantage. However, business owners can build trust with their customers and create a competitive advantage by adhering closely to data privacy laws such as the GDPR, CCPA, and the FTC's guidance.

The Federal Trade Commission's actions make it clear that they are serious about protecting consumers and ensuring that businesses play by the rules. The agency's recent statement clearly indicates that it will be taking a more aggressive stance when it comes to enforcing compliance.

As the regulatory landscape around data privacy continues to evolve, businesses should remain aware of the design elements that could be considered dark patterns.

With a particular focus on features that collect personal information or attempt to obtain user consent, being aware of these tactics can help your business assess and manage compliance risk.

In addition, brands should carefully review their own recurring billing and subscription programs in light of this policy and take steps to ensure that they comply with all applicable laws and regulations.

One such step is to have neutral personnel or third-party auditors review key user interface areas and information collection mechanisms. This will help ensure that the business follows through on its promises to protect the privacy of its customers.

Finally, businesses should keep an eye on any future FTC enforcement actions in this area, as they may provide further guidance on how best to comply with the law.

Remember that ignorance of the law is no excuse, so don't take any chances - protect your company and your customers by following the FTC's lead.
