About

Our easy-to-read ebook will help your business understand and navigate what it takes to be compliant with the GDPR.

On this page

- 1. How the GDPR Affects Your Online Business/Online Presence

- 2. Data Protection By Design and By Default

- 3. Legitimate Purposes for Data Collection

- 4. Minimize Data Collection

- 4.1. Anonymization of Data

- 4.2. Pseudonymization or Encryption of Data

- 4.3. Storage Limitation and Log Files

- 4.4. Centralized Management and Storage of Data

- 4.5. Lawful Basis for Processing Data

- 4.5.1. The Purpose Test

- 4.5.2. The Necessity Test

- 4.5.3. The Balancing Test

- 4.6. Working with Third Parties

- 4.7. Google EU User Consent Policy

- 4.8. Analytics

- 4.9. App Development Platforms

- 4.10. App Distribution Platforms

- 4.11. Advertising Tools

- 4.12. Email Marketing Services

- 4.13. Lead Generation

- 4.14. Enterprise Mobility Management

- 4.15. Voice Activation

- 5. First Steps to GDPR Compliance

- 5.1. New Projects

- 5.1.1. Are you the data controller or data processor?

- 5.1.2. Privacy by Design

- 5.1.3. The Benefits of Starting Fresh

- 5.2. Updating Current Projects

- 5.2.1. Purge Non-Essential Data

- 5.2.2. Deduplicate Data

- 5.2.3. Limit Data Access

- 5.3. Your Privacy Policy

- 5.4. Consent Checkboxes

- 5.5. Other Responsibilities

- 6. Note From the Editors

How the GDPR Affects Your Online Business/Online Presence

So far we've looked at the substance of the GDPR and how it applies in theory. Now we'll look in detail at how the law applies to online businesses, and consider the practical steps you can take to ensure you're compliant.

Firstly a reminder - with a few exceptions (such as the requirement to keep data processing records) the GDPR doesn't discriminate in terms of company size. It applies to everyone from huge multinational conglomerates like Google, all the way down to lone bloggers who aren't even pursuing a profit.

The GDPR doesn't care who you are. Its focus is on what you're doing.

Business websites and apps can process a lot of personal data, and sometimes they do so without even really thinking about the implications.

Let's consider the ways in which a website or app might be collecting personal data:

- Analytics

- Cookies

- Checkout pages

- Contact forms

The GDPR refers specifically to "online identifiers" as an example of personal data. We have to look to the EU's guidance and case law for an understanding of the implications of this.

For example, one such online identifier is the IP address. Two cases from the EU's Court of Justice confirm that an IP address falls under the scope of EU privacy law.

The first case was Scarlet Extended. Here's an excerpt of what the Court said:

26 Lastly, Scarlet considered that the installation of a filtering system would be in breach of the provisions of European Union law on the protection of personal data and the secrecy of communications, since such filtering involves the processing of IP addresses, which are personal data.

27 In that context, the referring court took the view that, before ascertaining whether a mechanism for filtering and blocking peer-to-peer files existed and could be effective, it had to be satisfied that the obligations liable to be imposed on Scarlet were in accordance with European Union law.

The second was Breyer v Germany, in which the Court said that the definition of personal information can extend to include even dynamic IP addresses.

The principles of the GDPR need to permeate through every stage of your online business. This sounds like a lot of work. It might be - but you may find you're already applying a lot of what the GDPR requires. And once you've done the required work, you'll be left with a safer, cleaner and more transparent set of practices. This is a good thing in itself.

Data Protection By Design and By Default

A key concept in the GDPR is set out at Article 25 - "data protection by design and by default."

Data protection by design means creating systems in a way that builds in the principles of the GDPR. This includes ensuring any areas where personal data is processed are adequate and secure.

Data protection by default means minimizing the amount of personal data that is processed and always choosing the least intrusive methods. Where there is a choice between processing a little bit of personal data or a lot of personal data, the default setting should be the lowest.

Here are some examples provided by the European Commission:

Data protection by design

The use of pseudonymisation (replacing personally identifiable material with artificial identifiers) and encryption (encoding messages so only those authorised can read them).

Data protection by default

A social media platform should be encouraged to set users' profile settings in the most privacy-friendly setting by, for example, limiting from the start the accessibility of the users' profile so that it isn't accessible by default to an indefinite number of persons.
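As a rough illustration of data protection by default, the sketch below models profile settings whose defaults are the most restrictive options, so that anything wider requires an explicit choice by the user. The class and field names (`ProfileSettings`, `Visibility`, `searchable_by_email`, `share_activity_with_partners`) are hypothetical and not taken from any real platform.

```python
from dataclasses import dataclass
from enum import Enum


class Visibility(Enum):
    ONLY_ME = "only_me"
    FRIENDS = "friends"
    PUBLIC = "public"


@dataclass
class ProfileSettings:
    # Hypothetical settings: every default is the most privacy-friendly option,
    # and the user has to opt in to anything wider.
    profile_visibility: Visibility = Visibility.ONLY_ME
    searchable_by_email: bool = False
    share_activity_with_partners: bool = False


# A new account starts with the most restrictive settings.
settings = ProfileSettings()
print(settings.profile_visibility)
```

The design choice here is simply that a new profile is never public unless the user changes it; which settings exist and what counts as "most restrictive" will depend on the service.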

These principles are dealt with throughout this book. We're now going to take a look at how they apply to a specific aspect of online business.

Legitimate Purposes for Data Collection

Under Article 5 (1)(b) of the GDPR, and as discussed in Chapter 5, personal data can only be collected for "specified, explicit and legitimate purposes." This means that data controllers must only collect personal data, by whatever means, when they have a good reason for doing so.

How is this relevant to online businesses? By way of example, let's imagine a scenario where a website might infringe this principle.

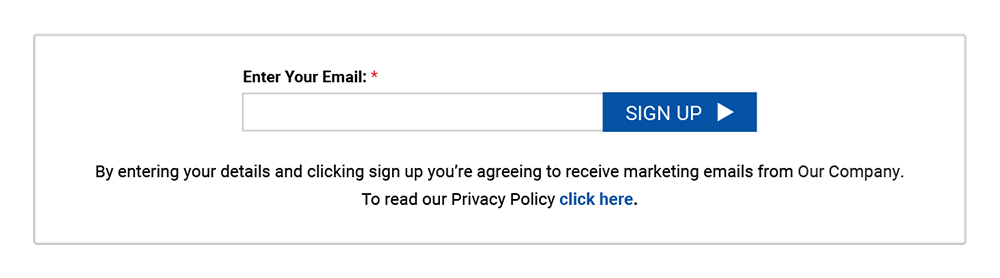

A form captures email addresses in order to sign users up to a mailing list:

The form is GDPR-compliant in that it makes the purposes of collecting the personal data clear: to receive marketing emails.

In its Privacy Policy, the data controller can explain to its users how long it will retain their email address data, and how users can withdraw consent or request deletion of their data. It makes no mention of any other use of the email addresses except for its own marketing purposes.

Now imagine the company starts sharing these email addresses with third parties so that the third parties can send marketing emails to the individuals. This would be a problem.

The users didn't consent to this, nor were they informed about it. This is processing personal data in a way that is not consistent with the purposes for which it has been collected.

The data controller would need to change the text in the box above to say something like "...you're agreeing to receive marketing emails from OKA and third parties that OKA selects."

It would also need to update its Privacy Policy to include a clause that lets users know that email addresses may be shared with third parties, and for the purpose of marketing.
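One way to keep processing tied to its stated purposes is to store the purposes a user agreed to alongside their data and check them before each use. The following is a minimal sketch under that assumption; the `Subscriber` class, the `may_use` helper and the purpose labels are all hypothetical.

```python
from dataclasses import dataclass, field
from typing import Set


@dataclass
class Subscriber:
    email: str
    # Purposes the user was told about and agreed to at sign-up (hypothetical labels).
    consented_purposes: Set[str] = field(default_factory=set)


def may_use(subscriber: Subscriber, purpose: str) -> bool:
    """Return True only if the user agreed to this specific purpose."""
    return purpose in subscriber.consented_purposes


alice = Subscriber("alice@example.com", {"own_marketing"})
print(may_use(alice, "own_marketing"))          # True
print(may_use(alice, "third_party_marketing"))  # False
```

In the scenario above, sharing the address with third parties would fail this check until the sign-up text and Privacy Policy had been updated and the user had agreed to the new purpose.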

Minimize Data Collection

Your website is likely to collect a lot of information from users in a number of different ways. Some are overt, such as an email address sign-up form, but others may be less detectable, if detectable at all.

For example, referral data (or "referrer data") is collected in logs and provides information about how a user arrived at the site. This type of log data might show the details of the site from which a user clicked through. This can be helpful in determining the effectiveness of affiliate marketing campaigns and personalized advertising campaigns, and for general analytics purposes.

In addition to requiring that personal data is only collected in connection with a specified purpose, at Article 5 (1)(c) the GDPR requires that any personal data processed is "relevant and limited to what is necessary" for fulfilling those purposes.

Your business will need to consider the principle of data minimization when determining how your business website collects data. And remember that any data about a person's web browsing activity can be personal data insofar as it relates indirectly to an identifiable person.

There are many people who would guard their web browser history more closely than their credit card information. The fact that referrer data only provides information about the previous website a user visited does not negate the requirement to treat this information as personal data. This could be very private information.

In light of this, it's clear why certain web browsers, such as Firefox, provide the option to prevent the sending of referral information by disabling the Referer header in an HTTP request.
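On the server side, one possible way to apply data minimization to referrer data is to store only the referring site rather than the full URL, since the path and query string often reveal far more than is needed to measure a campaign. This is a sketch only; the function name `minimize_referrer` is hypothetical, and whether the referring host alone is still personal data will depend on context.

```python
from urllib.parse import urlsplit


def minimize_referrer(referrer: str) -> str:
    """Keep only the scheme and host of a referrer URL, dropping path, query and fragment."""
    parts = urlsplit(referrer)
    if not parts.scheme or not parts.hostname:
        return ""  # Malformed or empty referrer: store nothing.
    return f"{parts.scheme}://{parts.hostname}"


print(minimize_referrer("https://example.com/search?q=private+condition#top"))
# https://example.com
```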

Anonymization of Data

Where personal data, such as IP addresses, is collected in log files, this data should be anonymized whenever possible. Once personal data is anonymized, and any identifiers have been irreversibly erased (not simply masked), it's no longer capable of leading to the identification of an individual. It is therefore no longer personal data, and the GDPR doesn't apply to it.

This is confirmed by guidance from the UK's Data Protection Authority, the Information Commissioner's Office (ICO) (at page 6 of the linked PDF):

- Data protection law does not apply to data rendered anonymous in such a way that the data subject is no longer identifiable. Fewer legal restrictions apply to anonymised data.

The Article 29 Working Party, however, does note that anonymized data is still covered by certain privacy laws such as the ePrivacy Directive.

It is clear that it's in a data controller's interests to ensure that personal data is anonymized.

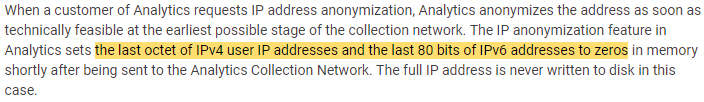

Let's look at how the identifier most commonly found in log files, an IP address, can be anonymized. A common suggestion, which comes both from the ICO and from IntArea, is to remove certain octets of an IP address to render it anonymous.

This is the strategy employed by Google Analytics in order to anonymize IP addresses:

When a customer of Analytics requests IP address anonymization, Analytics anonymizes the address as soon as technically feasible at the earliest possible stage of the collection network. The IP anonymization feature in Analytics sets the last octet of IPv4 user IP addresses and the last 80 bits of IPv6 addresses to zeros in memory shortly after being sent to the Analytics Collection Network. The full IP address is never written to disk in this case.

As noted, this occurs before the full IP address is ever written to disk.

IntArea actually advocates going further than this, suggesting that the last two octets of an IPv4 address are removed. This would turn "12.214.31.144," in Google's example, into "12.214.0.0."
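The truncation described above can be sketched with Python's standard `ipaddress` module. This is an illustration of the general technique, not Google's or IntArea's actual implementation; the function name `anonymize_ip` and its parameters are hypothetical. The defaults keep the first 24 bits of an IPv4 address (zeroing the last octet) and the first 48 bits of an IPv6 address (zeroing the last 80 bits).

```python
import ipaddress


def anonymize_ip(address: str, ipv4_keep_bits: int = 24, ipv6_keep_bits: int = 48) -> str:
    """Zero the trailing bits of an IP address, keeping only the leading prefix."""
    ip = ipaddress.ip_address(address)
    keep = ipv4_keep_bits if ip.version == 4 else ipv6_keep_bits
    # strict=False lets ip_network accept an address with host bits set
    # and returns the enclosing network, whose address has those bits zeroed.
    network = ipaddress.ip_network(f"{ip}/{keep}", strict=False)
    return str(network.network_address)


print(anonymize_ip("12.214.31.144"))                     # 12.214.31.0
print(anonymize_ip("12.214.31.144", ipv4_keep_bits=16))  # 12.214.0.0
```

Passing `ipv4_keep_bits=16` gives the stricter two-octet truncation. To match the approach described in the Google excerpt, the truncation would need to happen before the address is written to any log.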

Pseudonymization or Encryption of Data

If log files can't be anonymized and must contain personal data, any identifiers within the files should be pseudonymized or encrypted. Remember that this extends to dynamic IP addresses. There may be a good reason for retaining personal data in logs, but it must be treated carefully.

Pseudonymized or encrypted personal data in log files is still subject to all the safeguards of the GDPR. Don't be like AOL. In 2006, AOL released a database of search logs attributable to 650,000 users. AOL had taken certain measures to pseudonymize the data by replacing each user's login with a numerical code. This was not enough to prevent some of the users being personally identified when the data was made public.

The act of de-identification, whether by pseudonymization or any method of encryption, requires balancing the usability and accessibility of the data against the level of security. If personal data must be retained in logs, it should remain accessible in case it is subject to a rights request from the user.

Here's an example.

A website logs data about a user's activities. This includes data about the occasions on which that user accessed the site and records of form entries. The personal data needs to be secure enough to limit the amount of damage that would be caused in the event of a security breach. But it would also need to be accessible enough to allow a staff member (with the appropriate access permissions) to retrieve the data and present it to the user in the event of a subject access request.

Equally, if personal data is retained in log files, it must be because there is some operational reason for it to remain accessible. Where log data is pseudonymized or encrypted and can be decrypted via a key, this key must be kept securely and in a separate location from the pseudonymized or encrypted log data. Companies must only allow very privileged access to anything that enables the interpretation of personal data stored in log files.

Think of pseudonymization or encryption techniques as a lock on the front door. The lock can be extremely effective. But if the key is left under the mat, it won't be hard for someone to break in.
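As one possible illustration of why the key matters, the sketch below pseudonymizes an identifier with a keyed hash (HMAC-SHA256) from Python's standard library. Note that this is a one-way technique rather than reversible encryption: the key doesn't decrypt anything, but anyone holding it can recompute the token for a known IP address and so link log entries back to a person. The function name `pseudonymize` is hypothetical, and generating the key inline is for demonstration only.

```python
import hashlib
import hmac
import os


def pseudonymize(identifier: str, key: bytes) -> str:
    """Replace an identifier with a keyed HMAC-SHA256 digest."""
    return hmac.new(key, identifier.encode("utf-8"), hashlib.sha256).hexdigest()


# Assumption for illustration: in practice the key would be loaded from a
# secrets store kept apart from the logs, not generated next to the data.
key = os.urandom(32)

token = pseudonymize("203.0.113.7", key)
# The same input and key always give the same token, so log entries for one
# user can still be found when handling a subject access request.
assert token == pseudonymize("203.0.113.7", key)
```

Because the IPv4 address space is small, someone who obtains both the logs and the key could rebuild every token by brute force, which is the "key under the mat" problem in practice.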

It should be clear that, for this reason among many others, the collection of personal data in the log files should be kept to a minimum.

Storage Limitation and Log Files

At Article 5 (1)(e), the GDPR states that personal data must not be stored for a period longer than is necessary. This is known as the principle of storage limitation.

How long is it really necessary for you to store log data? IntArea suggests that IP addresses in server logs be erased after three days. Unless logs really need to be kept for longer, there's no downside to pruning them regularly:

- It means there is less data sitting around, thus reducing the risk of a harmful breach.

- It saves storage space. Log files can get bloated quickly.

- There will be no requirement to provide this information to a user in the event of a subject access request, or to find and delete it in the event of an erasure request, etc.

A utility such as logrotate can help you manage your log files. It can be used to automatically delete log files and "shred" them by overwriting them a specified number of times. This should ensure that log files are not readable post-deletion.
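Where a dedicated tool isn't available, age-based pruning can be approximated with a short script. The sketch below deletes `*.log` files older than a given number of days; the function name `prune_old_logs`, the file pattern and the directory path in the usage note are assumptions. Unlike the shredding described above, this performs a plain delete and does not overwrite the file contents.

```python
import time
from pathlib import Path
from typing import List, Optional


def prune_old_logs(log_dir: str, max_age_days: float, now: Optional[float] = None) -> List[str]:
    """Delete *.log files in log_dir last modified more than max_age_days ago."""
    now = time.time() if now is None else now
    cutoff = now - max_age_days * 86400  # seconds in a day
    removed = []
    for path in sorted(Path(log_dir).glob("*.log")):
        if path.is_file() and path.stat().st_mtime < cutoff:
            path.unlink()
            removed.append(path.name)
    return removed
```

Calling `prune_old_logs("/var/log/myapp", max_age_days=3)` from a daily scheduled job would follow the three-day suggestion mentioned above; the path here is a placeholder.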

Centralized Management and Storage of Data

In the context of the GDPR, there are two good reasons to have a centralized system for managing and storing log data:

- It allows for more efficient detection and analysis of security issues

- It helps with the facilitation of data subject rights requests

The National Institute of Standards and Technology (NIST) produced some guidance on log management that advocates implementing a centralized system. Bear in mind that this guidance is quite old, having been written in 2006, but it provides some valuable insight into the principles of log management.

The importance of the effective management and accessibility of log files is highlighted by this story of a Facebook user who submitted a subject access request. Facebook was unable to facilitate the request to provide sensitive log data to an individual user because of the way in which the data is indexed and stored in its logs. This led to the user lodging a complaint with Ireland's Data Protection Authority.

Doing the necessary work to ensure that logs are well-indexed and centrally accessible (in a secure way, by people with appropriate clearance) could prevent serious problems in the long term.

Lawful Basis for Processing Data

As mentioned in other chapters, all processing of personal data must take place under one of the six lawful bases. Let's consider how the lawful bases might apply to the processing of IP addresses in logs. Remember that this needs to be considered even if IP addresses are pseudonymized or encrypted.

Under certain conditions, it is possible to process someone's personal data where you have a legitimate interest in doing so. This is established in Article 6 (1)(f). Where there is a legitimate interest in processing someone's personal data in a particular way, there is no need to request the person's consent.

A Legitimate Interests Assessment is required to establish legitimate interests as a lawful basis for processing. The ICO suggests a particular format for this assessment known as the "three-part test":

- The purpose test (identify the legitimate interest);

- The necessity test (consider if the processing is necessary); and

- The balancing test (consider the individual's interests).

Let's apply this to the storing of IP addresses for security reasons.

The Purpose Test

Can there be a legitimate reason why a developer or web admin might need to log IP addresses? One potential reason is for security purposes, e.g. in order to detect and guard against denial of service (DoS) attacks.

In the case of Breyer v Germany the EU's Court of Justice decided that the storing of IP addresses for security purposes can, in theory, constitute a legitimate interest.

This is also reflected in Recital 49 of the GDPR, which states that:

"The processing of personal data to the extent strictly necessary and proportionate for the purposes of ensuring network and information security [...] constitutes a legitimate interest [...]"

So, storing IP addresses for security reasons passes the purpose test.

The Necessity Test

"Necessity" is defined in quite a broad way by the ICO in this instance. Here are some of the questions that the ICO suggests considering:

- Will the processing actually help you achieve your purpose?

- Is the processing proportionate to that purpose, or could it be seen as using a sledgehammer to crack a nut?

- Can you achieve your purpose without processing the data, or by processing less data?

- Can you achieve your purpose by processing the data in another more obvious or less intrusive way?

There could be various technical justifications for logging IP addresses for security purposes. If a website is being repeatedly spammed in a DoS attack, an examination of the logs could help identify the origin of the attack.

If it's possible to meet the security needs of a website or app in a way that does not involve processing personal data, then this will almost always be preferable, unless it involves a disproportionate amount of extra effort.

Assuming there is no viable alternative, processing IP addresses for security reasons passes the necessity test.

The Balancing Test

Finally, the balancing test requires consideration of the following according to the ICO:

- the nature of the personal data you want to process;

- the reasonable expectations of the individual; and

- the likely impact of the processing on the individual and whether any safeguards can be put in place to mitigate negative impacts.

This is a matter of balancing the risks and the privacy rights of the individual against the legitimacy and necessity of the processing of their personal data.

While it is technically feasible to identify someone even from a dynamic IP address, this is fairly low-risk personal data.

But the context is important. A list of IP addresses that have accessed a dating app or adult website is more sensitive than a list of IP addresses that have accessed an online grocery store.

As long as IP addresses are pseudonymized or encrypted then it's most likely that this act of processing personal data passes the balancing test.

If you can pass all three tests, this means it's not normally necessary to ask for permission (consent), or wait until someone's life depends on it (vital interests), in order to be allowed to collect and store IP addresses for security purposes.

Bear in mind that if you wish to rely on legitimate interests, you'll need to conduct this assessment and relate it to your own specific data processing requirements.

Working with Third Parties

Businesses rarely build absolutely everything in their websites from the ground up. They will almost always use some third parties throughout the development, maintenance and distribution processes of their sites.

Compliance with the GDPR is a requirement both when:

- Integrating third-party services into a project - for example, running analytics software on a website, or

- Integrating a project into third-party services - for example, distributing an app through a mobile marketplace

Software or service providers who process personal data for, or on behalf of, their customers will want those customers to comply with privacy law. These third parties will often specify this in their Terms and Conditions. Sometimes they also make specific demands about how their customers comply with privacy law.

In this section, we're looking at these requirements. The key takeaway from this section is that developers must be careful to read the Terms & Conditions of any third party companies they use. These Terms & Conditions can have specific instructions about the privacy practices of developers in building their website, app or other software.

Using third-party services also has other legal implications that are discussed more generally throughout this book.

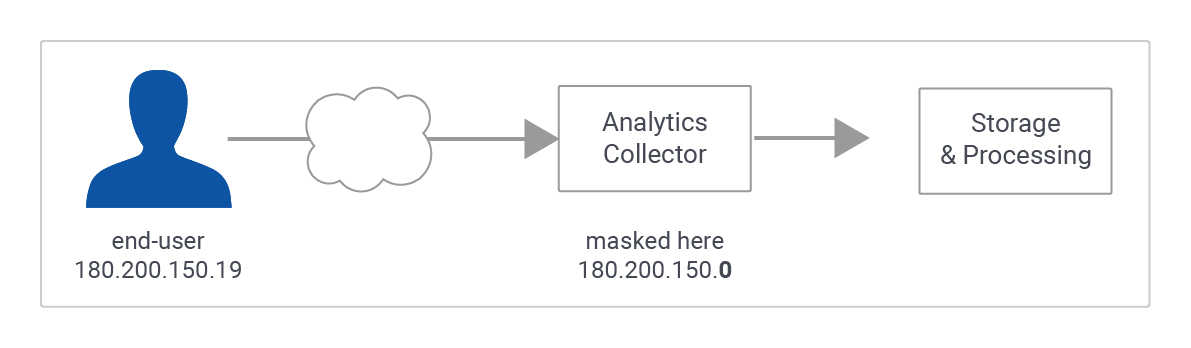

Google EU User Consent Policy

Google products are practically ubiquitous across the web, and many developers use them in producing websites and apps. We're going to look at the specific requirements of some of these products below. But first, it's worth noting that Google has a blanket EU User Consent Policy that applies across many of its products.

Let's take a look at what Google's EU User Consent Policy requires:

EU user consent policy

If your agreement with Google incorporates this policy, or you otherwise use a Google product that incorporates this policy, you must ensure that certain disclosures are given to, and consents obtained from, end users in the European Economic Area. If you fail to comply with this policy, we may limit or suspend your use of the Google product and/or terminate your agreement.

First Google says that its EU User Consent Policy is incorporated into policies for other products. Google has a bewildering number of policies across its services, and many of them point to other policies or sets of policies that must also be obeyed.

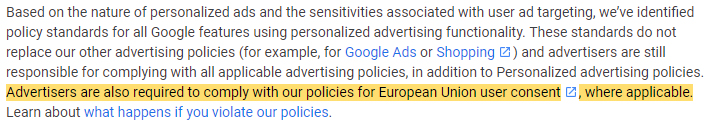

For example, the EU User Consent Policy is incorporated into the policy of any product involving personalized advertising, such as Google Ads:

Based on the nature of personalized ads and the sensitivities associated with user ad targeting, we've identified policy standards for all Google features using personalized advertising functionality. These standards do not replace our other advertising policies (for example, for Google Ads or Shopping) and advertisers are still responsible for complying with all applicable advertising policies, in addition to Personalized advertising policies. Advertisers are also required to comply with our policies for European Union user consent, where applicable. Learn about what happens if you violate our policies.

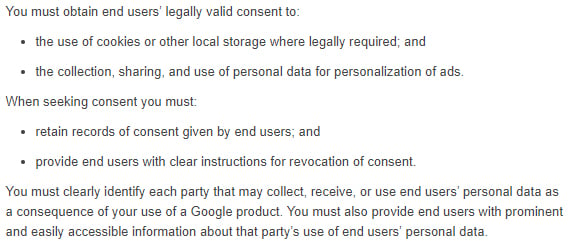

Google also explains (broadly) how its customers must obey EU law:

You must obtain end users' legally valid consent to:

- the use of cookies or other local storage where legally required; and

- the collection, sharing, and use of personal data for personalization of ads.

When seeking consent you must:

- retain records of consent given by end users; and

- provide end users with clear instructions for revocation of consent.

You must clearly identify each party that may collect, receive, or use end users' personal data as a consequence of your use of a Google product. You must also provide end users with prominent and easily accessible information about that party's use of end users' personal data.

We can read the section as a requirement to comply with the GDPR's conditions around consent, which are set out mainly in Article 7, and the requirement to provide transparent information, as set out mainly in Article 12.
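The requirements to retain records of consent and to let users revoke it could be met in many ways. One minimal sketch is an append-only log in which the most recent decision for a user and purpose is the one that counts. The class names `ConsentEvent` and `ConsentLog` and the purpose label are hypothetical, and a real system would persist these records rather than hold them in memory.

```python
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import List


@dataclass
class ConsentEvent:
    user_id: str
    purpose: str
    granted: bool          # False records a revocation
    recorded_at: datetime


class ConsentLog:
    """Append-only record of consent decisions (in-memory, for illustration)."""

    def __init__(self) -> None:
        self._events: List[ConsentEvent] = []

    def record(self, user_id: str, purpose: str, granted: bool) -> None:
        self._events.append(
            ConsentEvent(user_id, purpose, granted, datetime.now(timezone.utc))
        )

    def has_consent(self, user_id: str, purpose: str) -> bool:
        # The most recent decision for this user and purpose wins.
        for event in reversed(self._events):
            if event.user_id == user_id and event.purpose == purpose:
                return event.granted
        return False  # No record means no consent.


log = ConsentLog()
log.record("user-1", "ads_personalization", granted=True)
log.record("user-1", "ads_personalization", granted=False)  # user revokes
print(log.has_consent("user-1", "ads_personalization"))     # False
```

Keeping revocations as new entries, rather than deleting the original grant, preserves the history of what was agreed to and when.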

Analytics

There are significant privacy implications inherent in using an analytics suite. The data gathered by analytics software can potentially be used to build a picture of a person's online habits. The ICO has published guidance which demonstrates that the use of web analytics falls firmly within the scope of the GDPR (at page 20 of linked PDF):

- Some types of big data analytics, such as profiling, can have intrusive effects on individuals.

- Organisations need to consider whether the use of personal data in big data applications is within people's reasonable expectations.

- The complexity of the methods of big data analysis, such as machine learning, can make it difficult for organisations to be transparent about the processing of personal data.

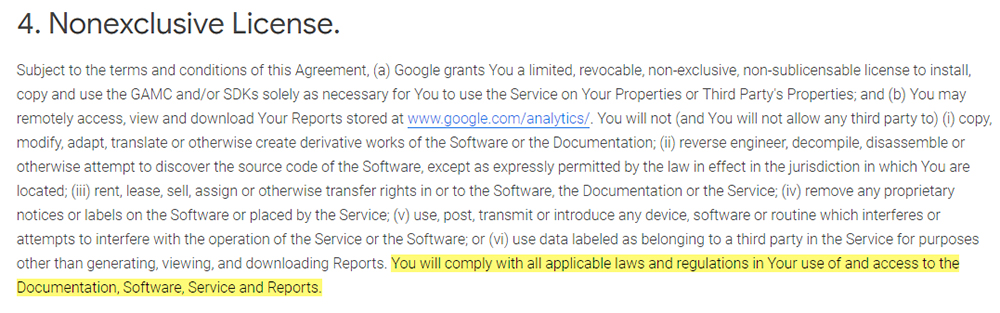

Google Analytics makes clear in its Terms of Service that legal compliance is a prerequisite of being granted a license for the use of its software:

4. Nonexclusive License.

Subject to the terms and conditions of this Agreement, (a) Google grants You a limited, revocable, non-exclusive, non-sublicensable license to install, copy and use the GAMC and/or SKDs solely as necessary for You to use the Service on Your Properties or Third Party's Properties; and (b) You may remotely access, view and download Your Reports stored at www.google.com/analytics/. You will not (and You will not allow any third party to) (i) copy, modify, adapt, translate or otherwise create derivative works of the Software or the Documentation; (ii) reverse engineer, decompile, disassemble or otherwise attempt to discover the source code of the Software, except as expressly permitted by the law in effect in the jurisdiction in which You are located; (iii) rent, lease, sell, assign or otherwise transfer rights in or to the Software, the Documentation or the Service; (iv) remove any proprietary notices or labels on the Software or placed by the Service; (v) use, post, transmit or introduce any device, software or routine which interferes or attempts to interfere with the operation of the Service or the Software; or (vi) use data labeled as belonging to a third party in the Service for purposes other than generating, viewing, and downloading Reports. You will comply with all applicable laws and regulations in Your use of and access to the Documentation, Software, Service and Reports.

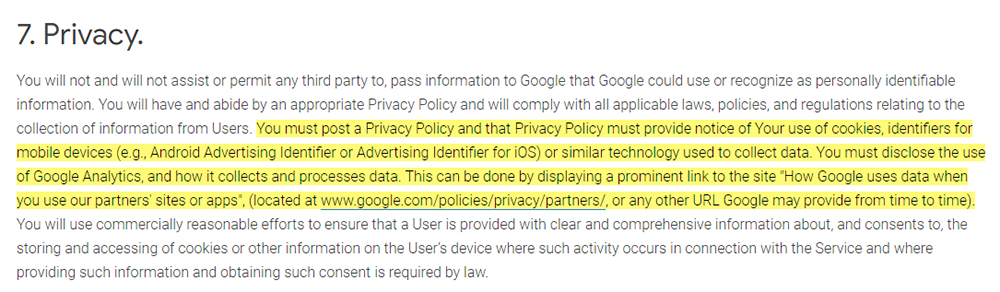

Google Analytics also requires its customers have a Privacy Policy that includes reference to its service, and that explains how Google Analytics processes personal data:

7. Privacy.

You will not and will not assist or permit any third party to, pass information to Google that Google could use or recognize as personally identifiable information. You will have and abide by an appropriate Privacy Policy and will comply with all applicable laws, policies, and regulations relating to the collection of information from Users. You must post a Privacy Policy and that Privacy Policy must provide notice of Your use of cookies, identifiers for mobile devices (e.g., Android Advertising Identifier or Advertising Identifier for iOS) or similar technology used to collect data. You must disclose the use of Google Analytics, and how it collects and processes data. This can be done by displaying a prominent link to the site "How Google uses data when you use our partners' sites or apps", (located at www.google.com/policies/privacy/partners/ , or any other URL Google may provide from time to time). You will use commercially reasonable efforts to ensure that a User is provided with clear and comprehensive information about, and consents to, the storing and accessing of cookies or other information on the User's device where such activity occurs in connection with the Service and where providing such information and obtaining such consent is required by law.

And take a look at the last few lines of that section, which require Google Analytics users to provide information about cookies, and gain consent for use of cookies, where required to do so by law.

EU law does require this in respect of the types of cookies set by Google Analytics. The ePrivacy Directive is more relevant here than the GDPR, but the two work together: the consent that the ePrivacy Directive requires must meet the GDPR's standard for consent.

Like many things, cookie consent is not explained clearly or explicitly in the GDPR, but here it is confirmed by the ICO:

You must tell people if you set cookies, and clearly explain what the cookies do and why. You must also get the user's consent. Consent must be actively and clearly given.

There is an exception for cookies that are essential to provide an online service at someone's request (eg to remember what's in their online basket, or to ensure security in online banking).

The same rules also apply if you use any other type of technology to store or gain access to information on someone's device.

So, as you can see, using Google Analytics requires compliance with the GDPR.

App Development Platforms

Where developers build an app using a third party platform, they'll have to abide by certain legal requirements set out by the provider. This will inevitably include compliance with any relevant privacy laws. Google Firebase is a commonly used app development platform for developing mobile apps for Android and iOS.

Like many other platforms, Google requires Firebase customers to agree to a Data Processing Agreement as part of its Terms. Here's an excerpt from the Firebase Data Processing and Security Terms:

12.2 Google's Processing Records. Customer acknowledges that Google is required under the GDPR to: (a) collect and maintain records of certain information, including the name and contact details of each processor and/or controller on behalf of which Google is acting and, where applicable, of such processor's or controller's local representative and data protection officer; and (b) make such information available to the supervisory authorities. Accordingly Customer will, where requested, provide such information to Google via the Admin Console or other means provided by Google, and will use the Admin Console or such other means to ensure that all information provided is kept accurate and up-to-date.

This clause makes reference to a data processor's obligations under Article 30 to keep records of processing activities and provide them to a Data Protection Authority when required.

The Data Processing Agreement also acknowledges that in certain contexts, Google or the developer might be either the data processor or data controller.

Whilst the Data Processing Agreement does make provision for either party to act as the data controller, this role will generally be fulfilled by a developer who creates an app in Firebase. This is explained in another document - Privacy and Security in Firebase:

Data Protection

Firebase is GDPR-ready

On May 25th, 2018, the EU General Data Protection Regulation (GDPR) replaces the 1995 EU Data Protection Directive. Google is committed to helping our customers succeed under the GDPR, whether they are large software companies or independent developers.

The GDPR imposes obligations on data controllers and data processors. Firebase customers typically act as the "data controller" for any personal data about their end-users they provide to Google in connection with their use of Firebase, and Google is, generally, a "data processor".

This means that data is under the customer's control. Controllers are responsible for obligations like fulfilling an individual's rights with respect to their personal data.

If you're a customer, and would like to understand your responsibilities as a data controller, you should familiarize yourself with the provisions of the GDPR, and check on your compliance plans.

Like some other Google services, Firebase requires any company with an EU Representative or Data Protection Officer (DPO) to register them with Google. This should be done in the Privacy Settings of the Firebase Console.

App Distribution Platforms

There's really no way around it - for a mobile app to have any significant reach, it will have to be hosted in either Apple's or Google's marketplace platform. This requires jumping through quite a few hoops.

For example, Google Play Store effectively requires GDPR compliance. Here's a section from the Google Play Developer Distribution Agreement:

4.8 You agree that if You make Your Products available through Google Play, You will protect the privacy and legal rights of users. If the users provide You with, or Your Product accesses or uses, usernames, passwords, or other login information or personal information, You agree to make the users aware that the information will be available to Your Product, and You agree to provide legally adequate privacy notice and protection for those users. Further, Your Product may only use that information for the limited purposes for which the user has given You permission to do so. If Your Product stores personal or sensitive information provided by users, You agree to do so securely and only for as long as it is needed. However, if the user has opted into a separate agreement with You that allows You or Your Product to store or use personal or sensitive information directly related to Your Product (not including other products or applications), then the terms of that separate agreement will govern Your use of such information. If the user provides Your Product with Google Account information, Your Product may only use that information to access the user's Google Account when, and for the limited purposes for which, the user has given You permission to do so.

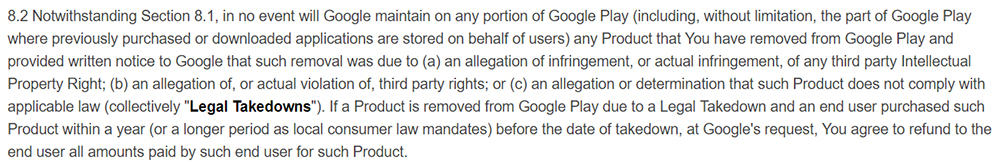

Here's another crucial section of this agreement:

8.2 Notwithstanding Section 8.1, in no event will Google maintain on any portion of Google Play (including, without limitation, the part of Google Play where previously purchased or downloaded applications are stored on behalf of users) any Product that You have removed from Google Play and provided written notice to Google that such removal was due to (a) an allegation of infringement, or actual infringement, of any third party Intellectual Property Right; (b) an allegation of, or actual violation of, third party rights; or (c) an allegation or determination that such Product does not comply with applicable law (collectively "Legal Takedowns"). If a Product is removed from Google Play due to a Legal Takedown and an end user purchased such Product within a year (or a longer period as local consumer law mandates) before the date of takedown, at Google's request, You agree to refund to the end user all amounts paid by such end user for such Product.

Google can take down an app from the Play Store where there has been even an allegation that it's legally non-compliant. At Google's request, the owner must then refund anyone who purchased the app in the past year (or a longer period where local consumer law mandates it). This could be a critical blow for any app developer and is yet another reason to ensure you can demonstrate GDPR compliance.

Advertising Tools

Because they use potentially intrusive methods and technologies, such as cookies and remarketing, advertising tools like Google Ads, Google AdMob and MoPub require their users to be compliant with privacy laws.

For example, Google AdSense provides the Terms of Service for both Google AdMob (used by mobile app publishers to run ads on a mobile app) and AdSense (used by web publishers). The AdSense Terms of Use effectively require developers to maintain GDPR compliance:

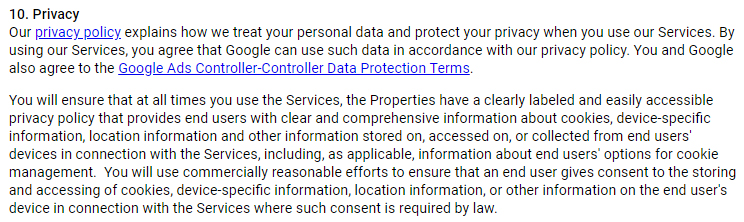

10. Privacy

Our privacy policy explains how we treat your personal data and protect your privacy when you use our Services. By using our Services, you agree that Google can use such data in accordance with our privacy policy. You and Google also agree to the Google Ads Controller-Controller Data Protection Terms.

You will ensure that at all times you use the Services, the Properties have a clearly labeled and easily accessible privacy policy that provides end users with clear and comprehensive information about cookies, device-specific information, location information and other information stored on, accessed on, or collected from end users' devices in connection with the Services, including, as applicable, information about end users' options for cookie management. You will use commercially reasonable efforts to ensure that an end user gives consent to the storing and accessing of cookies, device-specific information, location information, or other information on the end user's device in connection with the Services where such consent is required by law.

Here the developer must obtain consent to collect various types of personal data such as cookie data, where required to do so by law. As we've seen above, this is necessary under the GDPR.

Email Marketing Services

Using third-party email marketing services like MailChimp is a good way to help ensure GDPR-compliance. Because many businesses see privacy and anti-spam legislation as such a minefield, companies such as MailChimp depend on being able to offer legally compliant marketing services.

This is not to say, however, that outsourcing email campaigns excuses a business from complying with the GDPR. This is made clear by MailChimp in its terms:

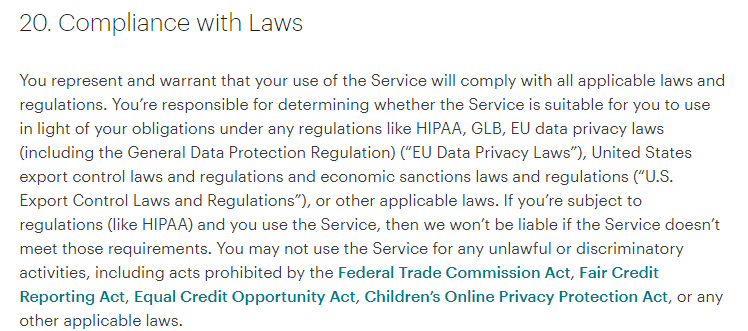

20. Compliance with Laws

You represent and warrant that your use of the Service will comply with all applicable laws and regulations. You're responsible for determining whether the Service is suitable for you to use in light of your obligations under any regulations like HIPAA, GLB, EU data privacy laws (including the General Data Protection Regulation) ("EU Data Privacy Laws"), United States export control laws and regulations and economic sanctions laws and regulations ("U.S. Export Control Laws and Regulations"), or other applicable laws. If you're subject to regulations (like HIPAA) and you use the Service, then we won't be liable if the Service doesn't meet those requirements. You may not use the Service for any unlawful or discriminatory activities, including acts prohibited by the Federal Trade Commission Act, Fair Credit Reporting Act, Equal Credit Opportunity Act, Children's Online Privacy Protection Act, or other laws that apply to commerce.

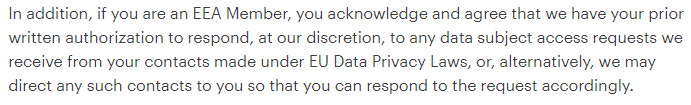

MailChimp's terms also include some clauses that are required in a Data Processing Agreement:

In addition, if you are an EEA Member, you acknowledge and agree that we have your prior written authorization to respond, at our discretion, to any data subject access requests we receive from your contacts made under EU Data Privacy Laws, or, alternatively, we may direct any such contacts to you so that you can respond to the request accordingly.

By agreeing to this clause, a business is authorizing MailChimp to provide its customers with any personal data it holds about them on receipt of a subject access request. This is unusual for a data processor, which would normally supply the information to the data controller to provide to the customer directly. There's no need for the business to worry about this, of course, so long as it's operating in a transparent and GDPR-compliant way.

Lead Generation

Lead generation and inbound marketing services, such as HubSpot and CrazyEgg, also require their users to abide by privacy law.

HubSpot specifies in its Terms of Service that its customers must obtain any consent required for processing under the GDPR:

For customers that are located in the European Union or the European Economic Area, the Standard Contractual Clauses adopted by the European Commission, attached to the Data Processing Agreement, with HubSpot, Inc., which provide adequate safeguards with respect to the personal data processed by us under this Agreement and pursuant to the provisions of our Data Processing Agreement apply. You acknowledge in all cases that HubSpot acts as the data processor of Customer Data and you are the data controller of Customer Data under applicable data protection regulations in the European Union and European Economic Area. Customer will obtain and maintain any required consents necessary to permit the processing of Customer Data under this Agreement. If you are subject to the GDPR you understand that if you give an integration provider access to your HubSpot account, you serve as the data controller of such information and the integration provider serves as the data processor for the purposes of those data laws and regulations that apply to you. In no case are such integration providers our sub-processors.

You can see that HubSpot also uses its terms to navigate the complicated relationship between itself, its customers, and the integration providers, such as Salesforce, that connect to a customer's HubSpot account.

An understanding of the relevant GDPR provisions is essential in order to meaningfully agree to these sorts of terms.

Enterprise Mobility Management

Android is a common choice of platform for businesses that wish to develop custom software or hardware to be used as part of their operations.

Android Enterprise supports developers using Android in the workplace, specifically in the context of enterprise mobility management (EMM). Developers might use Android Enterprise resources to set up employee-owned devices for use at work (BYOD), or company-owned devices to be used by staff.

Pitney Bowes is an example of a company that uses Android Enterprise resources to develop its own devices - a range of office equipment.

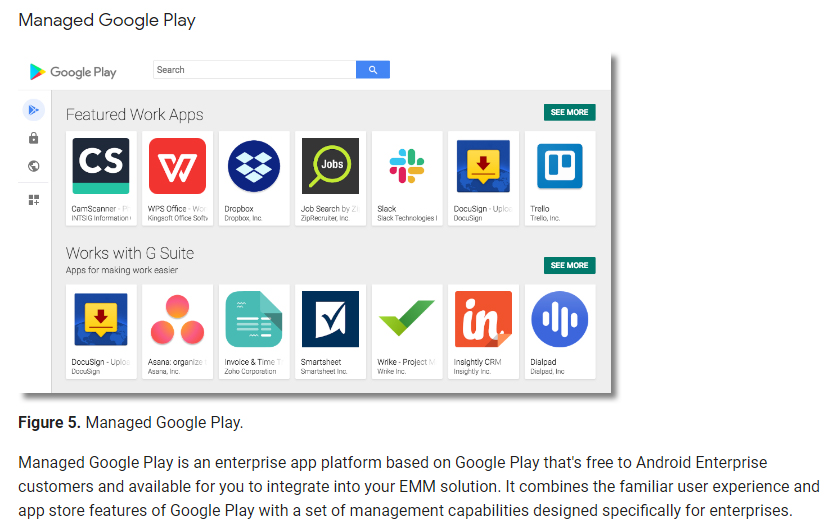

In the EMM context, Android Enterprise provides application programming interfaces (APIs) such as Managed Google Play.

Complying with the GDPR is required in order to use Android Enterprise. Google sets out some recommendations on how to comply with the GDPR when using the service:

Recommendations

As a current or future customer or partner of Android, you need to ensure that your implementation is prepared for the GDPR. Consider the following:

- Familiarize yourself with the provisions of the regulation, particularly how they may differ from any previous data protection obligations. Be aware that new requirements may require new agreements with service providers or completely new solutions to meet the stringent requirements ahead.

- How does your organization ensure user transparency and control around data use?

- Are you sure that your organization has the right consents in place where these are needed under the GDPR?

- Does your organization have the right systems to record user preferences and consents?

- How might you demonstrate to regulators and partners that you meet the principles of the GDPR and are an accountable organization?

Mobile devices typically process a lot of personal data. Developing a device that uses Android as its OS will require careful consideration of the associated privacy implications.

Even devices running "stock" Android collect personal data. Google states that the Android OS itself, "in so far as it is executed exclusively within the mobile device," does not send personal data to Google. However, several apps which come in the "factory" (default) version of Android do.

In respect of some of these apps, Google is the data controller:

- Google Play Services

- Google apps on Android (e.g. Google Chrome, Google Search)

- Zero-touch enrollment (for corporate-owned devices)

For others, Google is the data processor:

- Managed Google Play

- Android Management API

- Zero-touch reseller

This is relevant to developers in several ways.

A data controller that has developed an EMM device using Android Enterprise will need to be explicit in its Privacy Policy about the relationship between its data subjects (e.g. its employees) and Google.

Google is acting as a data processor in some respects, and so it is also necessary for a company using Android Enterprise to have a Data Processing Agreement in place with Google. The Android Enterprise Data Processing and Security Terms takes care of this.

Note that under Article 28(1), the data controller is obliged to ensure that any data processors it contracts are compliant with the GDPR. There are no exceptions to this, even where Google is the data processor. This will require a thorough reading of this Data Processing Agreement, as the buck stops with the data controller in the event of a data breach.

Voice Activation

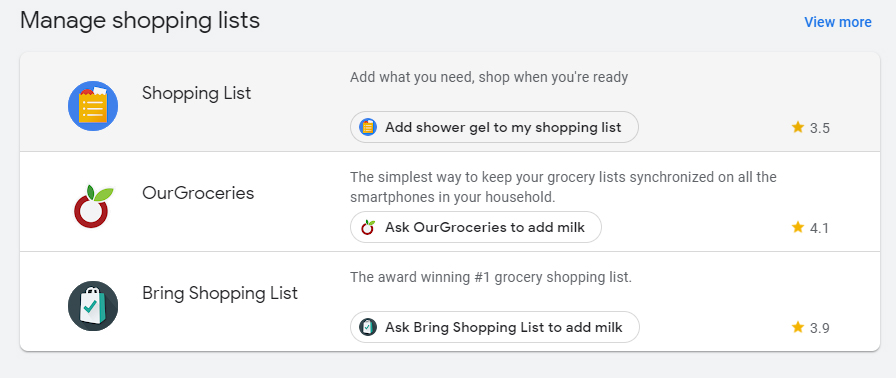

Many opportunities are emerging for developers to integrate their software with voice-activated services like Google Assistant, Apple's Siri, or Amazon Alexa.

Through Actions on Google, developers can have their Android app interact with Google Assistant. By publishing an Action through Actions on Google, developers can enable their users to "talk" to their app or device via Google Assistant.

Here are some examples of apps that have published Actions for Google Assistant via Actions on Google:

There are a number of privacy considerations inherent to voice-command apps and devices. So it's not surprising that Google requires developers to have a Privacy Policy before publishing an Action:

Why we require a privacy policy

Privacy disclosures -- made via a privacy policy and in-Action conversations -- help users understand what data you collect, why you collect it, and what you do with it. The disclosures should be comprehensive, accurate, and easy to understand by users. Users will have an opportunity to review the policy when they browse actions in the Directory, and we encourage developers to make it available on their website and other convenient places.

Actions on Google expects developers' Privacy Policies to, at a minimum, address these three questions:

What information do you collect?

In your policy you should disclose all the information your Action collects. This includes information that you may collect automatically, such as server and HTTP logs, data transmitted by the Actions on Google API to you, and usage information. This also includes information that you get from the user, either directly or via the permissions API. You should also disclose whether you collect any persistent identifiers (like the Google ID).

How do you use the information?

In your policy, you should disclose how you use the information you collect. For example, you may use the information to provide certain services to users, to recognize them the next time they use your Action, or to send them promotional emails.

What information do you share?

In your policy, you should disclose the circumstances when you share information. For example, you may share information with third parties as part of the service (like a restaurant reservation Action), with other users (like a social network or forum), with marketing partners, or with service providers that assist with your service (like hosting companies or technology platforms).

Google doesn't specifically refer to the GDPR in its Actions on Google Privacy Policy guidance. But Google is asking developers to provide much the same information that Article 13 of the GDPR requires.

First Steps to GDPR Compliance

Now that you are up to speed on the core concepts and major changes brought about by the GDPR, what's the next step? The answer to this question isn't the same for everyone, but let's take a look at what you might do next in your journey to GDPR compliance.

Under the GDPR, you are required to have a legal basis for collecting and processing the personal information of your data subjects. You should determine which legal basis you will use when collecting and processing personal information, and be prepared to defend this decision if it is challenged.

New Projects

If you are a new company or starting a new project with GDPR compliance as one of your goals, you have a fresh slate and can hit the ground running with a good understanding of these new regulations.

Are you the data controller or data processor?

One of the first items you must address is determining whether you are the data controller, data processor, or both.

Simply put, the data controller is the one who determines how personal data is collected, why it is collected, and what is done with it afterwards. The data processor simply uses data to complete a task dictated by the data controller. In many cases, the data controller is also the data processor, collecting personal data for certain purposes and then also processing it to achieve those tasks.

You may wish to review Chapter 3 of this ebook, as well as reread Article 24: Responsibility of the controller and Article 28: Processor to help you answer this question.

Privacy by Design

Privacy by Design is a concept that the GDPR has adopted as a requirement to ensure that data handlers consider the privacy and security of their data subjects every step of the way. In essence, privacy by design expects developers to have security baked into their projects before those projects even begin.

In the earliest stages of conception and planning, the GDPR expects developers to be mindful of how their systems and processes are designed to protect against data breaches, to ensure that personal data is only collected and kept as needed, and to process that data securely and lawfully so that data subjects are not put at risk.

The alternative to this concept is developers creating an app or website and then tacking on security measures at the end of the project. This is not only inefficient, but often results in vulnerabilities or oversights that can be exploited intentionally or may fail on their own, putting the privacy of data subjects at risk.

Here are some things to keep in mind when beginning a new project with privacy by design:

- Use pseudonymization where reasonable to add an extra layer of security and privacy

- Give access to the personal information you control only to those who need it, and only the information they need to fulfill their tasks (including third-parties and data processors)

- Include all necessary declarations, rights, and options for your data subjects in your Privacy Policy so they can make informed decisions

- Use encryption to protect data when transferred or otherwise vulnerable

- Make use of sufficiently strong passwords (never use default passwords or simple ones such as "1234")

- Ensure internal employees and outside consultants are properly trained in your security procedures and their responsibilities under the GDPR

- Have a contract in place for data processors and third-party vendors you use so everyone understands their roles and expectations

- Have a plan for data breaches, subject access requests, and other security scenarios

- Ensure all of your software, firmware, and hardware have modern security measures (security suites, OS updates, firewalls, spam blockers, etc.)

By being mindful of these key areas of your development and considering security in other aspects of your project and business as a whole, you can minimize risk to yourself and your data subjects.
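As an illustration of the pseudonymization point in the list above, here is a minimal Python sketch that swaps an email address for a keyed token. The function name `pseudonymize`, the `DEMO_KEY` value, and the record fields are assumptions made up for this example, and HMAC-SHA256 is only one of several reasonable techniques. Keep in mind that pseudonymized data still counts as personal data under the GDPR, so this adds a layer of protection rather than removing your obligations.

```python
import hashlib
import hmac


def pseudonymize(identifier: str, secret_key: bytes) -> str:
    """Return a keyed, deterministic token that stands in for an identifier."""
    # Normalize so "Ada@Example.com " and "ada@example.com" map to the same token.
    normalized = identifier.strip().lower().encode("utf-8")
    # HMAC-SHA256: without the key, the token cannot be recomputed from a guess.
    return hmac.new(secret_key, normalized, hashlib.sha256).hexdigest()


# Hypothetical key for illustration only; a real key should be randomly
# generated and stored separately from the pseudonymized data.
DEMO_KEY = b"replace-with-a-randomly-generated-key"

record = {"email": "Ada@Example.com", "plan": "pro"}

# The stored record carries the token instead of the email address.
safe_record = {
    "user_token": pseudonymize(record["email"], DEMO_KEY),
    "plan": record["plan"],
}
```

Because the token is deterministic, records belonging to the same person can still be linked for analysis, while anyone without the key cannot easily work back to the email address.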

The Benefits of Starting Fresh

New projects and startups have the distinct advantage of prior knowledge about the GDPR before they even begin. As opposed to long-running companies who may now need to make drastic changes to their operations in order to comply with the GDPR, you can start off on the right foot with a strong foundation and guidelines to help you toward success.

With that foundation in place, it's up to you to decide the best course to take for your project.

What data will you collect and process? What legal basis will you use to do so? When will you ask for consent?

A good place to start is drafting a Privacy Policy which you can then use along with the GDPR as an outline for your operations. This will help you see where your strong points are and where you may need to make more effort towards compliance, and it will make your actual procedures clear.

Updating Current Projects

Updating current projects in response to the GDPR can be more complicated as the rules you started the project with now vary from the rules you must abide by under the GDPR.

While no two projects are the same, there are some steps that should be taken when converting a current project to meet GDPR requirements.

Purge Non-Essential Data

As mentioned in the last chapter, retaining unneeded personal data goes against GDPR compliance and opens you and your users to unnecessary risk. One of the first steps that should be taken when converting a project to GDPR compliance is to delete or anonymize any information that is no longer needed.

Purging your systems and databases of extraneous personal information is not only required under the GDPR, but makes setting up adequate security measures much more manageable.

Once the old data has been erased or anonymized, you no longer need to create or modify security measures to protect it, or worry about the collection methods you began the project with. You can focus instead on developing security measures for the data you collect moving forward.
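A purge like the one described above might be sketched as follows in Python. The 365-day `RETENTION` period, the `last_active` field, and the list of identifying fields are all assumptions for illustration; the right values depend on your own purposes and legal basis. Note also that stripping direct identifiers does not by itself guarantee anonymity, since the remaining fields can sometimes be combined to re-identify someone.

```python
from datetime import datetime, timedelta, timezone

# Hypothetical retention period and identifying fields for this example.
RETENTION = timedelta(days=365)
IDENTIFYING_FIELDS = {"name", "email"}


def strip_identifiers(record: dict) -> dict:
    """Drop the fields that directly identify a person."""
    return {k: v for k, v in record.items() if k not in IDENTIFYING_FIELDS}


def apply_retention(records: list[dict], now: datetime) -> tuple[list[dict], list[dict]]:
    """Split records into those still needed and those past retention.

    Records past the retention period are returned with identifiers removed.
    """
    cutoff = now - RETENTION
    kept = [r for r in records if r["last_active"] >= cutoff]
    expired = [strip_identifiers(r) for r in records if r["last_active"] < cutoff]
    return kept, expired


now = datetime(2024, 1, 1, tzinfo=timezone.utc)
records = [
    {"name": "Old User", "email": "old@example.com", "plan": "free",
     "last_active": datetime(2021, 6, 1, tzinfo=timezone.utc)},
    {"name": "New User", "email": "new@example.com", "plan": "pro",
     "last_active": datetime(2023, 11, 15, tzinfo=timezone.utc)},
]

kept, expired = apply_retention(records, now)
```

In this sketch the first record falls outside the retention period and loses its name and email, while the second is kept unchanged. If you have no use for the stripped records, deleting them outright is the simpler option.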

Deduplicate Data

A related step you should take is deduplicating personal data that is redundant and not needed in more than one location. This not only allows you to focus your security efforts in one area, but reduces the risk of oversights where copies or backups of your database may be left vulnerable.

While not explicitly called out in the GDPR, redundant information goes against the GDPR's data minimization and storage limitation principles, under which data should only be collected, processed, and retained as needed.

Duplicate data could be seen as "not needed" and therefore it should not be retained.

Deduplication might also come into play if you are developing multiple iterations of the same project (for example, a GDPR-compliant version and a non-compliant version). While retaining a backup of your database may be necessary at some points in the process, your old version of the project should be cleansed of personal data once you transition to the new version. Allowing an old version of an app or website to exist with sensitive data attached is an unnecessary risk.
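For duplicate records within a single dataset, a deduplication pass could look something like this Python sketch. It assumes, purely for illustration, that records are dictionaries with an `email` field and an ISO-formatted `updated` date, and that the most recently updated copy is the one worth keeping.

```python
def deduplicate(records: list[dict]) -> list[dict]:
    """Keep the most recently updated record for each normalized email."""
    latest: dict[str, dict] = {}
    for record in records:
        # Treat addresses that differ only by case or whitespace as the same person.
        key = record["email"].strip().lower()
        current = latest.get(key)
        # ISO dates (YYYY-MM-DD) sort correctly as plain strings.
        if current is None or record["updated"] > current["updated"]:
            latest[key] = record
    return list(latest.values())


records = [
    {"email": "ada@example.com", "city": "London", "updated": "2023-01-10"},
    {"email": "Ada@Example.com ", "city": "Paris", "updated": "2024-03-02"},
    {"email": "bob@example.com", "city": "Berlin", "updated": "2022-07-19"},
]

unique = deduplicate(records)
```

Here the two entries for Ada collapse into the newer one, leaving two records in total. Copies that live in separate systems, such as backups or an old version of a site, need to be tracked down and handled separately.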

Limit Data Access

One of the ways that duplicate data can put you and your data subjects at risk has to do with access.

Say you are creating a new GDPR-compliant version of your website and you still have the old website as a backup and for reference. You might update who has access to the new website's database according to GDPR guidelines (third-parties, current and former employees, etc.). If you do not also update access to the old website, former employees and third-parties you no longer work with may be able to access your database. This could put the personal data you control at risk.

By removing duplicate data and properly limiting access to the data you control, you can ensure that only those with authorization to do so can access your database.

The GDPR states that only those necessary should have access to the personal data in your database. Without limiting access to the personal data that you control, you make yourself vulnerable in two ways.

First, more people than necessary can access and potentially mishandle the personal information under your protection. Secondly, in the event of mishandling or a data breach, it is much more difficult to determine who is responsible.

Limiting who has access to your database reduces the avenues for mishandling and also makes it easier to determine the points where mishandling may have occurred, so you can pinpoint issues and remedy any vulnerabilities.
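One way to picture both benefits, fewer people seeing the data and an easier trail to follow, is the Python sketch below. The role names, the `ROLE_FIELDS` mapping, and the in-memory `access_log` are hypothetical; a real system would typically rely on its database's or platform's own permission and audit features.

```python
# Hypothetical roles and the fields each one needs to do its job.
ROLE_FIELDS = {
    "support": {"name", "email"},
    "billing": {"name", "billing_address"},
    "analytics": {"plan"},
}

# Each entry records who looked, in which role, and which fields they saw.
access_log: list[tuple[str, str, tuple[str, ...]]] = []


def view_for(role: str, user: str, record: dict) -> dict:
    """Return only the fields the role is allowed to see, and log the access."""
    allowed = ROLE_FIELDS.get(role, set())  # unknown roles see nothing
    visible = {k: v for k, v in record.items() if k in allowed}
    access_log.append((user, role, tuple(sorted(visible))))
    return visible


customer = {
    "name": "Ada Lovelace",
    "email": "ada@example.com",
    "billing_address": "12 Example Street",
    "plan": "pro",
}

analytics_view = view_for("analytics", "dana", customer)
```

An analyst gets only the `plan` field, a role that isn't listed gets nothing, and every lookup leaves a log entry that can be reviewed if something goes wrong.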

Paragraph 4 of Article 6 notes some factors that should be weighed in situations where you have data you've collected for one purpose and wish to process it in a different way, and:

- You don't have consent to do so, or

- The data isn't being processed according to a Union or Member State law

These factors include the following:

- Links between the original purpose for which the personal data was collected and the purposes of the further processing,

- The context that the personal data was collected under, particularly looking at the relationship between the data subjects and the collector,

- What kind of personal data it is, and in particular whether it's special categories of personal data or personal data related to criminal convictions and offences,

- Any possible consequences the data subjects may endure because of the further processing, and

- Whether appropriate safeguards exist to protect the data, such as encryption or pseudonymisation

Basically, if you wish to use data for something other than the purpose you collected it for and disclosed to your users, you'll need compelling reasons, and you can't put your users at risk of having their data compromised or abused.

Your Privacy Policy

Review your current Privacy Policy and check that it is complete, accurate, and up to date. Also be sure that your Privacy Policy is written in a way that is easily understandable to your average user.

Consider the examples below:

Example #1:

Example #2:

As you can see, the verbiage in example #1 is much more casual and conversational, while the verbiage in example #2 is full of cumbersome legalese. If your Privacy Policy reads more like example #2, you should rewrite it in more natural language so your users can easily digest the information you disclose there.

Consent Checkboxes

Affirmative consent is one of the most noticeable changes brought about by the GDPR, one that you have almost certainly encountered by now.

Many privacy laws prior to the GDPR required consent for certain data collection and processing activities, but this was rarely spelled out specifically.

App and website users would be considered to be agreeing to legal agreements by default if they chose to use the service, whether or not they even read the policies that they were agreeing to. While this was accepted practice for several years, the GDPR treats it as a serious fault.

The GDPR clarified that active consent must be given along with other stipulations for fair and legal data collection and processing. Active consent requires the user to interact in some way (typically by checking a box or clicking a button that reads "I Accept" or something equally clear) in order to ensure that they agree to the policies of that app or website.

While users may still often choose to not read the policies they are agreeing to, they are at least required to confirm their consent and cannot simply be found to be in an agreement unknowingly.

The GDPR calls for "affirmative consent" in a number of situations and specifies that passive consent is no longer sufficient.

Checkboxes used to obtain affirmative consent must not be checked by default, as the user must be required to take an action to give their consent. This eliminates the chance of a user not noticing a passive popup and being unaware that they have consented to something.

Here are some locations where placing a checkbox to obtain consent is a good idea:

- A popup for new visitors asking for their consent to collect and process their data according to your Privacy Policy (which you should include a link to). You may also ask them to agree to your Terms & Conditions and Cookie Policy in this popup.

- Any processing activity that requires consent should have its own separate checkbox. You should specify when a processing activity is mandatory or where lack of consent may affect the functionality of your app or website (location services, for example).

- If you are a data controller who relies on legitimate interest for certain processes, or a data processor, you may want to disclose that.

- Pages where the user fills out personal information, such as when creating an account.

- Forms for entering a contest or sweepstakes, where you may also include the conditions for entry.

- The payment screen where users provide payment and shipping information.

These locations are just some common examples of where developers should include a checkbox for affirmative consent under the GDPR.

The GDPR also gives data subjects the right to revoke their consent at any time. It is best practice to notify users at the time of consent that this is an option and where they can do it (usually in settings or in their profile).

When consent is not needed because legitimate interest is used as the legal basis, this should be disclosed and users should be notified of their right to object as per Article 21 of the GDPR.
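Behind the checkboxes, you will also need some way to record what each user agreed to and when, and to honor a later withdrawal. The Python sketch below shows one possible shape for such a record. The `ConsentRecord` class, its method names, and the purpose labels are assumptions for illustration, not part of any particular framework.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass
class ConsentRecord:
    """Tracks one user's consent per purpose; nothing is granted by default."""

    user_id: str
    granted: dict[str, datetime] = field(default_factory=dict)
    withdrawn: dict[str, datetime] = field(default_factory=dict)

    def give(self, purpose: str) -> None:
        # Call this only when the user actively ticks the box for this purpose.
        self.granted[purpose] = datetime.now(timezone.utc)
        self.withdrawn.pop(purpose, None)

    def withdraw(self, purpose: str) -> None:
        # Keep a timestamp of the withdrawal so it can be demonstrated later.
        if purpose in self.granted:
            del self.granted[purpose]
            self.withdrawn[purpose] = datetime.now(timezone.utc)

    def has_consent(self, purpose: str) -> bool:
        return purpose in self.granted


consent = ConsentRecord(user_id="user-123")
consent.give("newsletter")      # user ticked the newsletter checkbox
consent.withdraw("newsletter")  # user later changed their mind in settings
```

Each purpose is tracked separately, mirroring the advice to give every processing activity its own checkbox, and a new record starts with no consent at all, which is the code equivalent of an unchecked box.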

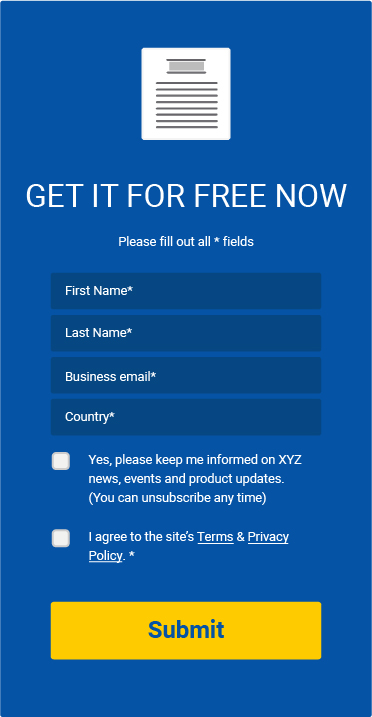

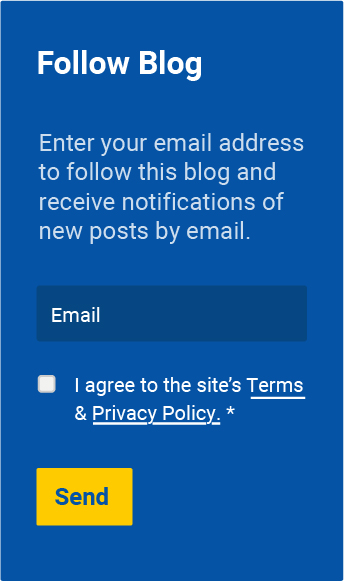

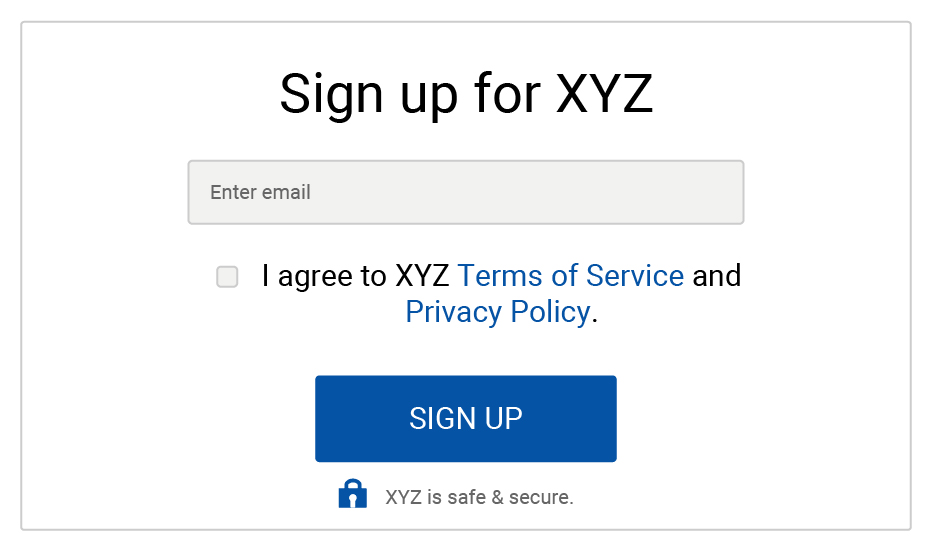

Below are some examples of consent checkboxes:

While these examples are from mobile apps, the same concept applies whether it's an app or a website requesting consent.

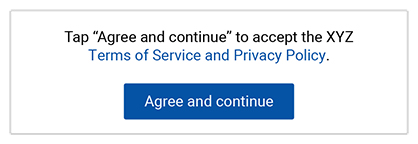

The old way of obtaining consent prior to the GDPR often included a popup that stated that by using the website, you agree to their Privacy Policy and Terms & Conditions. This sort of passive consent is no longer permissible under the GDPR. The GDPR requires affirmative, active consent. This can be in the form of a checkbox or button that must be clicked to give consent.

Example #1:

Example #2:

Example #3:

Example #1 is the old way of obtaining passive consent. This method is no longer viable under the GDPR.

Examples #2 and #3 show the new way of obtaining active consent. Note that in example #2, the checkbox must not be pre-checked, so the user has to click the box to give their consent.

Other Responsibilities

Chapter 3 includes lists of responsibilities for both data controllers and data processors. By this point you should know which one you are, so you should review your responsibilities to ensure you are meeting all of these expectations.

Once again, it would be impossible and redundant for this ebook to cover every aspect of the GDPR as it continues to grow, change, and receive clarifications. The purpose of this ebook is not to replace the need to read the GDPR, but instead to help you understand it by providing examples and breakdowns of the information it provides. It is your responsibility as a data handler to read the entirety of the GDPR and keep up to date with the laws therein as they evolve, change and become more defined through real-life applications.

Note From the Editors

With more and more privacy laws popping up all the time and existing privacy laws being modernized and updated all around the world, it can seem daunting to keep up with what it takes to stay compliant.

We created this ebook to help you navigate the groundbreaking GDPR a little bit easier and take some of the stress and mystery out of compliance. We hope it helped explain some of the philosophy behind the law, demystified some of its requirements and provided you with some practical steps you can take to meet its mandates.

At the time of writing, the GDPR is the strictest global privacy law in existence. Moving forward, privacy laws around the world will likely be modeled after the GDPR. This means that if you understand the GDPR and become compliant with it, you'll be in a really good position to comply with other global privacy laws, both now and in the future.

Thank you for trusting us to provide you with informative, useful content and guidance. We hope this and our other resources will help your business, blog or website stay compliant and thrive.